Local LLM Automation & Private AI Processing

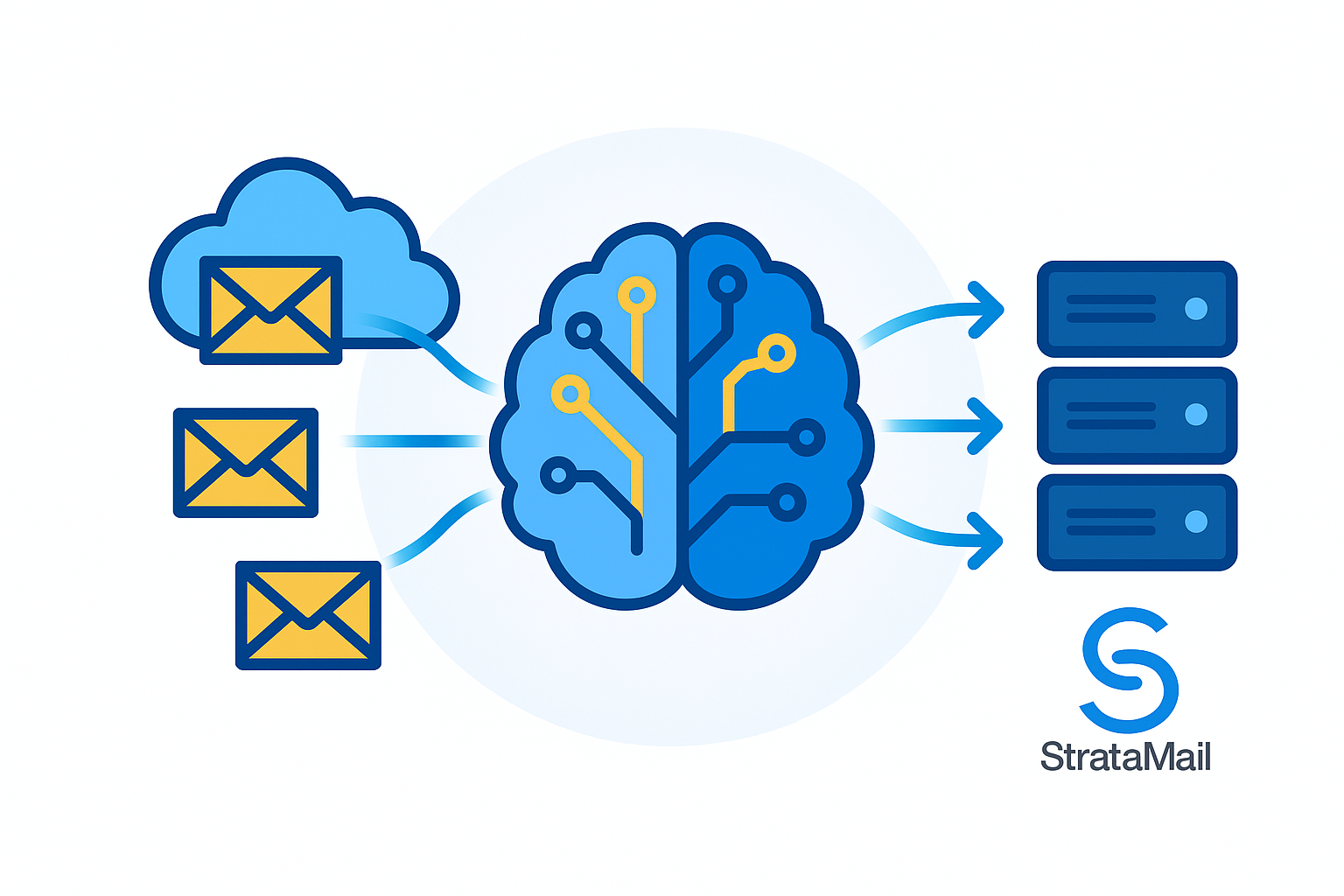

Local LLMs let you run AI models on your own server, VPS, or Proxmox cluster, so no data leaves your environment. Keeping processing in-house protects privacy, supports compliance requirements, and gives you full control over the models, while still enabling powerful workflow automation.

What Local LLM Automation Solves

Many teams rely on staff to manually review emails, documents, forms, and logs. Local LLMs can automate extraction, summarization, classification, routing, decision-making, and system updates, all without exposing data to cloud AI services.
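The Python below is a rough sketch of the classification and routing step. It sends an email to a locally hosted model and maps the model's answer to a queue. The sketch assumes an Ollama server on `localhost:11434` with a model named `llama3` already pulled. The category labels, queue names, and helper functions are hypothetical placeholders and are not part of any specific product.

```python
import json
import urllib.request

# Assumption: an Ollama server running on this machine; URL and model are placeholders.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "llama3"  # hypothetical; use whichever model you have pulled locally

# Hypothetical routing table: category label -> destination queue.
ROUTES = {
    "billing": "finance-queue",
    "support": "helpdesk-queue",
    "sales": "sales-queue",
}
DEFAULT_ROUTE = "manual-review"


def build_prompt(email_text: str) -> str:
    """Ask the model to pick exactly one of the known labels."""
    labels = ", ".join(ROUTES)
    return (
        f"Classify the following email into exactly one of: {labels}. "
        "Reply with the label only.\n\n"
        f"Email:\n{email_text}"
    )


def classify_email(email_text: str) -> str:
    """Send the email to the local model and return its raw text answer."""
    payload = json.dumps(
        {"model": MODEL, "prompt": build_prompt(email_text), "stream": False}
    ).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    # The request targets localhost, so the email text never leaves the machine.
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read())["response"]


def route_for(label: str) -> str:
    """Map the model's answer to a queue, falling back to manual review."""
    cleaned = label.strip().strip(".").lower()
    return ROUTES.get(cleaned, DEFAULT_ROUTE)
```

Calling `route_for(classify_email(text))` returns a queue name. If the model answers with anything other than a known label, the email falls back to `manual-review`. This sends unexpected output to a person instead of guessing at a route.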

Common Use Cases

- Email Parsing & Routing: Automate intake, summarization, decisions, and workflow triggers.

- Ticketing & Support: Categorize issues, generate replies, populate fields, validate requests.